Python爬虫必备技巧详细总结

自定义函数

import requests

from bs4 import BeautifulSoup

headers={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'}

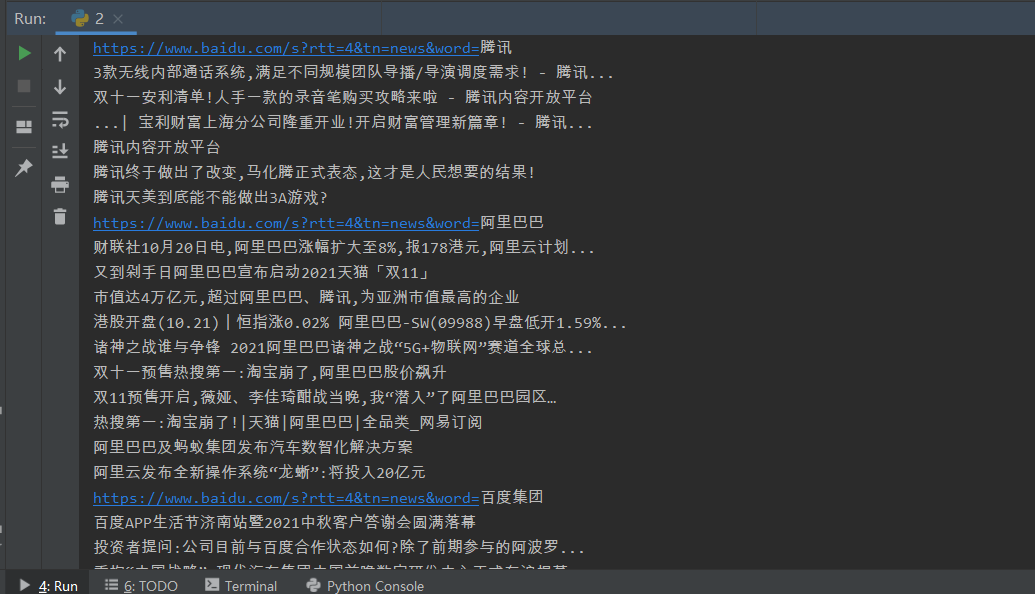

def baidu(company):

url = 'https://www.baidu.com/s?rtt=4&tn=news&word=' + company

print(url)

html = requests.get(url, headers=headers).text

s = BeautifulSoup(html, 'html.parser')

title=s.select('.news-title_1YtI1 a')

for i in title:

print(i.text)

# 批量调用函数

companies = ['腾讯', '阿里巴巴', '百度集团']

for i in companies:

baidu(i)

批量输出多个搜索结果的标题

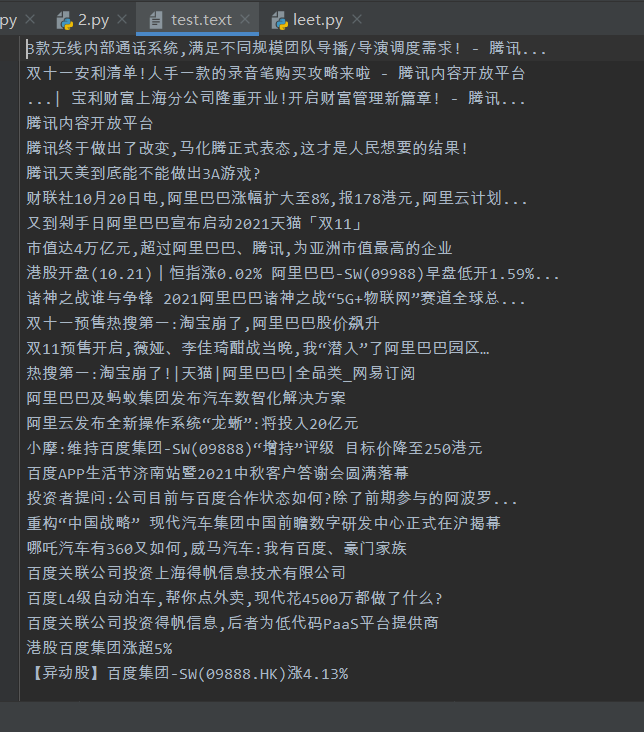

结果保存为文本文件

import requests

from bs4 import BeautifulSoup

headers={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'}

def baidu(company):

url = 'https://www.baidu.com/s?rtt=4&tn=news&word=' + company

print(url)

html = requests.get(url, headers=headers).text

s = BeautifulSoup(html, 'html.parser')

title=s.select('.news-title_1YtI1 a')

fl=open('test.text','a', encoding='utf-8')

for i in title:

fl.write(i.text + '\n')

# 批量调用函数

companies = ['腾讯', '阿里巴巴', '百度集团']

for i in companies:

baidu(i)

写入代码

fl=open('test.text','a', encoding='utf-8')

for i in title:

fl.write(i.text + '\n')

异常处理

for i in companies:

try:

baidu(i)

print('运行成功')

except:

print('运行失败')

写在循环中 不会让程序停止运行 而会输出运行失败

休眠时间

import time

for i in companies:

try:

baidu(i)

print('运行成功')

except:

print('运行失败')

time.sleep(5)

time.sleep(5)

括号里的单位是秒

放在什么位置 则在什么位置休眠(暂停)

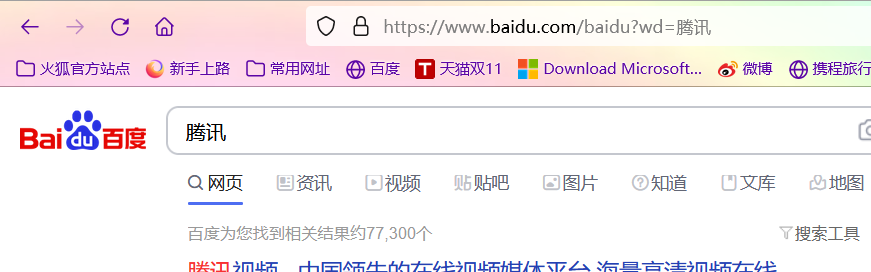

爬取多页内容

百度搜索腾讯

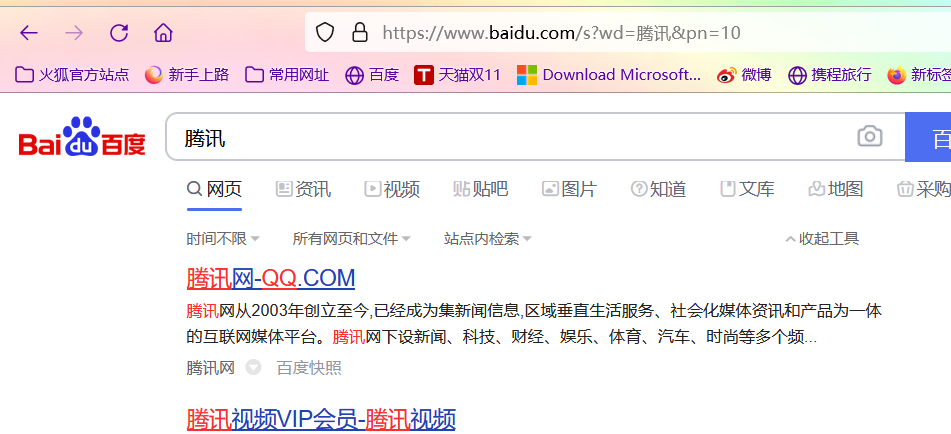

切换到第二页

去掉多多余的

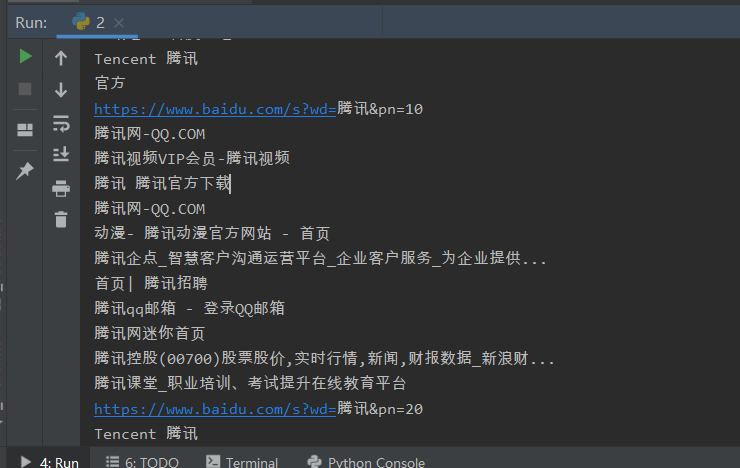

https://www.baidu.com/s?wd=腾讯&pn=10

分析出

https://www.baidu.com/s?wd=腾讯&pn=0 为第一页

https://www.baidu.com/s?wd=腾讯&pn=10 为第二页

https://www.baidu.com/s?wd=腾讯&pn=20 为第三页

https://www.baidu.com/s?wd=腾讯&pn=30 为第四页

..........

代码

from bs4 import BeautifulSoup

import time

headers={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0'}

def baidu(c):

url = 'https://www.baidu.com/s?wd=腾讯&pn=' + str(c)+'0'

print(url)

html = requests.get(url, headers=headers).text

s = BeautifulSoup(html, 'html.parser')

title=s.select('.t a')

for i in title:

print(i.text)

for i in range(10):

baidu(i)

time.sleep(2)

到此这篇关于Python爬虫必备技巧详细总结的文章就介绍到这了,更多相关Python 爬虫技巧内容请搜索本站以前的文章或继续浏览下面的相关文章希望大家以后多多支持本站!

版权声明:本站文章来源标注为YINGSOO的内容版权均为本站所有,欢迎引用、转载,请保持原文完整并注明来源及原文链接。禁止复制或仿造本网站,禁止在非www.yingsoo.com所属的服务器上建立镜像,否则将依法追究法律责任。本站部分内容来源于网友推荐、互联网收集整理而来,仅供学习参考,不代表本站立场,如有内容涉嫌侵权,请联系alex-e#qq.com处理。

关注官方微信

关注官方微信