一次线上mongo慢查询问题排查处理记录

一、简单介绍

mongo语句查询条件中出现null或空串,可能会导致索引失效,查询优化器无法选择正确的查询计划,出现慢查询引起服务异常

mongo查询的执行计划使用了LRU缓存,在很多种情况下会失效,导致重新选择执行计划并缓存,供后续同类查询直接使用;

服务开始时一直运行正常,当执行计划失效后,恰好出现null值的查询导致选择了错误的执行计划并缓存,后续正常的查询也会出现异常。

二、事件脉络

1、起因

用户中心收到业务方反馈,第三方登录注册出现频繁dubbo调用超时。

org.apache.dubbo.rpc.RpcException: Failed to invoke the method loginWithThird in the service weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService. Tried 1 times of the providers [10.65.5.0:11090] (1/4) from the registry node1.zk.all.platform.wtc.hwhosts.com:2181 on the consumer 10.65.1.81 using the dubbo version 2.7.3-SNAPSHOT. Last error is: loginWithThird_2 failed and fallback failed.

at org.apache.dubbo.rpc.cluster.support.FailoverClusterInvoker.doInvoke(FailoverClusterInvoker.java:113)

at org.apache.dubbo.rpc.cluster.support.AbstractClusterInvoker.invoke(AbstractClusterInvoker.java:248)

at org.apache.dubbo.rpc.cluster.support.wrapper.MockClusterInvoker.invoke(MockClusterInvoker.java:78)

at org.apache.dubbo.rpc.proxy.InvokerInvocationHandler.invoke(InvokerInvocationHandler.java:55)

at org.apache.dubbo.common.bytecode.proxy9.loginWithThird(proxy9.java)

at weli.peanut.user.service.LoginService.loginAndRegister(LoginService.java:684)

at weli.peanut.user.service.LoginService.loginByThirdPartyV2(LoginService.java:629)

at weli.peanut.web.controller.api.UserLoginController.lambda$loginByThirdPartyV2$0(UserLoginController.java:113)

at weli.peanut.common.controller.BaseController.resultForActionWithCat(BaseController.java:71)

at weli.peanut.web.controller.api.UserLoginController.loginByThirdPartyV2(UserLoginController.java:113)

at sun.reflect.GeneratedMethodAccessor2017.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:205)

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:133)

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:116)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:827)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:738)

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:85)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:963)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:897)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:970)

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:872)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:646)

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:846)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:727)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:303)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:208)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:241)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:208)

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:197)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:241)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:208)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:220)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:122)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:501)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:170)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:98)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:116)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:408)

at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1040)

at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:607)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1721)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.run(NioEndpoint.java:1679)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: com.netflix.hystrix.exception.HystrixRuntimeException: loginWithThird_2 failed and fallback failed.

at com.netflix.hystrix.AbstractCommand$22.call(AbstractCommand.java:818)

at com.netflix.hystrix.AbstractCommand$22.call(AbstractCommand.java:793)

at rx.internal.operators.OperatorOnErrorResumeNextViaFunction$4.onError(OperatorOnErrorResumeNextViaFunction.java:140)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at com.netflix.hystrix.AbstractCommand$DeprecatedOnFallbackHookApplication$1.onError(AbstractCommand.java:1454)

at com.netflix.hystrix.AbstractCommand$FallbackHookApplication$1.onError(AbstractCommand.java:1379)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at rx.observers.Subscribers$5.onError(Subscribers.java:230)

at rx.internal.operators.OnSubscribeThrow.call(OnSubscribeThrow.java:44)

at rx.internal.operators.OnSubscribeThrow.call(OnSubscribeThrow.java:28)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:51)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:35)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:41)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:41)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:41)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:41)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OperatorOnErrorResumeNextViaFunction$4.onError(OperatorOnErrorResumeNextViaFunction.java:142)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at rx.internal.operators.OperatorSubscribeOn$1$1.onError(OperatorSubscribeOn.java:59)

at rx.observers.Subscribers$5.onError(Subscribers.java:230)

at rx.internal.operators.OnSubscribeDoOnEach$DoOnEachSubscriber.onError(OnSubscribeDoOnEach.java:87)

at rx.observers.Subscribers$5.onError(Subscribers.java:230)

at com.netflix.hystrix.AbstractCommand$DeprecatedOnRunHookApplication$1.onError(AbstractCommand.java:1413)

at com.netflix.hystrix.AbstractCommand$ExecutionHookApplication$1.onError(AbstractCommand.java:1344)

at rx.observers.Subscribers$5.onError(Subscribers.java:230)

at rx.observers.Subscribers$5.onError(Subscribers.java:230)

at rx.internal.operators.OnSubscribeThrow.call(OnSubscribeThrow.java:44)

at rx.internal.operators.OnSubscribeThrow.call(OnSubscribeThrow.java:28)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:51)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:35)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:51)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:35)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:41)

at rx.internal.operators.OnSubscribeDoOnEach.call(OnSubscribeDoOnEach.java:30)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:48)

at rx.internal.operators.OnSubscribeLift.call(OnSubscribeLift.java:30)

at rx.Observable.unsafeSubscribe(Observable.java:10151)

at rx.internal.operators.OperatorSubscribeOn$1.call(OperatorSubscribeOn.java:94)

at com.netflix.hystrix.strategy.concurrency.HystrixContexSchedulerAction$1.call(HystrixContexSchedulerAction.java:56)

at com.netflix.hystrix.strategy.concurrency.HystrixContexSchedulerAction$1.call(HystrixContexSchedulerAction.java:47)

at com.netflix.hystrix.strategy.concurrency.HystrixContexSchedulerAction.call(HystrixContexSchedulerAction.java:69)

at rx.internal.schedulers.ScheduledAction.run(ScheduledAction.java:55)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

... 3 more

Caused by: java.lang.RuntimeException: org.apache.dubbo.rpc.RpcException: Invoke remote method timeout. method: loginWithThird, provider: dubbo://10.65.5.0:11090/weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService?anyhost=true&application=peanut-admin&bean.name=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService&check=false&deprecated=false&dubbo=2.0.2&dynamic=true&generic=false&interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService&lazy=false&loadbalance=leastactive&methods=logout,sendSms,loginWithVisitor,loginWithThird,loginWithPhone,loginWithThirdBindPhone&pid=1®ister=true®ister.ip=10.65.1.81&release=2.7.3-SNAPSHOT&remote.application=wormhole-api&retries=0&revision=1.1.11-SNAPSHOT&side=consumer&sticky=false&timeout=5000×tamp=1647332146309, cause: org.apache.dubbo.remoting.TimeoutException: Waiting server-side response timeout by scan timer. start time: 2022-03-15 20:33:31.937, end time: 2022-03-15 20:33:36.968, client elapsed: 0 ms, server elapsed: 5031 ms, timeout: 5000 ms, request: Request [id=690506, version=2.0.2, twoway=true, event=false, broken=false, data=RpcInvocation [methodName=loginWithThird, parameterTypes=[class weli.wormhole.rpc.user.center.model.LoginWithThirdRequest, class weli.wormhole.rpc.user.center.model.LoginCommonParam], arguments=[LoginWithThirdRequest(accessToken=54_y3n-YsttdKOklr_X-mbjRgKAKROLDKfx3PV23zAQHmBvmT3zVliFp9mWodHSUEcKgNnJGC4AP_bAOX-2ZSSsfCHfNM5wfjbJDyiQh-sCjFQ, openId=ofrsY6xraPjv9augA9L2kUIB0-Fk, unionId=null, code=null, type=WX, nickName=null, headImgurl=null, sex=null, skipPhoneBind=1), LoginCommonParam(channel=vivo, ip=null, verName=null, platform=null, deviceId=null, imei=null, app=peanut, subApp=peanut, dfId=DUE_59hD2L9BBgukNR3Jv2DjnW00iRtU8z0eRFVFXzU5aEQyTDlCQmd1a05SM0p2MkRqblcwMGlSdFU4ejBlc2h1, idfa=null, mac=null, oaid=null, androidId=null)], attachments={path=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, version=0.0.0, timeout=5000}]], channel: /10.65.1.81:35450 -> /10.65.5.0:11090

at suishen.esb.hystrix.dubbo.DubboHystrixCommand.run(DubboHystrixCommand.java:93)

at suishen.esb.hystrix.dubbo.DubboHystrixCommand.run(DubboHystrixCommand.java:12)

at com.netflix.hystrix.HystrixCommand$2.call(HystrixCommand.java:301)

at com.netflix.hystrix.HystrixCommand$2.call(HystrixCommand.java:297)

at rx.internal.operators.OnSubscribeDefer.call(OnSubscribeDefer.java:46)

... 26 more

Caused by: org.apache.dubbo.rpc.RpcException: Invoke remote method timeout. method: loginWithThird, provider: dubbo://10.65.5.0:11090/weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService?anyhost=true&application=peanut-admin&bean.name=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService&check=false&deprecated=false&dubbo=2.0.2&dynamic=true&generic=false&interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService&lazy=false&loadbalance=leastactive&methods=logout,sendSms,loginWithVisitor,loginWithThird,loginWithPhone,loginWithThirdBindPhone&pid=1®ister=true®ister.ip=10.65.1.81&release=2.7.3-SNAPSHOT&remote.application=wormhole-api&retries=0&revision=1.1.11-SNAPSHOT&side=consumer&sticky=false&timeout=5000×tamp=1647332146309, cause: org.apache.dubbo.remoting.TimeoutException: Waiting server-side response timeout by scan timer. start time: 2022-03-15 20:33:31.937, end time: 2022-03-15 20:33:36.968, client elapsed: 0 ms, server elapsed: 5031 ms, timeout: 5000 ms, request: Request [id=690506, version=2.0.2, twoway=true, event=false, broken=false, data=RpcInvocation [methodName=loginWithThird, parameterTypes=[class weli.wormhole.rpc.user.center.model.LoginWithThirdRequest, class weli.wormhole.rpc.user.center.model.LoginCommonParam], arguments=[LoginWithThirdRequest(accessToken=54_y3n-YsttdKOklr_X-mbjRgKAKROLDKfx3PV23zAQHmBvmT3zVliFp9mWodHSUEcKgNnJGC4AP_bAOX-2ZSSsfCHfNM5wfjbJDyiQh-sCjFQ, openId=ofrsY6xraPjv9augA9L2kUIB0-Fk, unionId=null, code=null, type=WX, nickName=null, headImgurl=null, sex=null, skipPhoneBind=1), LoginCommonParam(channel=vivo, ip=null, verName=null, platform=null, deviceId=null, imei=null, app=peanut, subApp=peanut, dfId=DUE_59hD2L9BBgukNR3Jv2DjnW00iRtU8z0eRFVFXzU5aEQyTDlCQmd1a05SM0p2MkRqblcwMGlSdFU4ejBlc2h1, idfa=null, mac=null, oaid=null, androidId=null)], attachments={path=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, version=0.0.0, timeout=5000}]], channel: /10.65.1.81:35450 -> /10.65.5.0:11090

at org.apache.dubbo.rpc.protocol.AsyncToSyncInvoker.invoke(AsyncToSyncInvoker.java:63)

at org.apache.dubbo.rpc.listener.ListenerInvokerWrapper.invoke(ListenerInvokerWrapper.java:78)

at com.alibaba.dubbo.rpc.Invoker$CompatibleInvoker.invoke(Invoker.java:55)

at suishen.esb.hystrix.dubbo.DubboHystrixCommand.run(DubboHystrixCommand.java:84)

... 30 more

Caused by: java.util.concurrent.ExecutionException: org.apache.dubbo.remoting.TimeoutException: Waiting server-side response timeout by scan timer. start time: 2022-03-15 20:33:31.937, end time: 2022-03-15 20:33:36.968, client elapsed: 0 ms, server elapsed: 5031 ms, timeout: 5000 ms, request: Request [id=690506, version=2.0.2, twoway=true, event=false, broken=false, data=RpcInvocation [methodName=loginWithThird, parameterTypes=[class weli.wormhole.rpc.user.center.model.LoginWithThirdRequest, class weli.wormhole.rpc.user.center.model.LoginCommonParam], arguments=[LoginWithThirdRequest(accessToken=54_y3n-YsttdKOklr_X-mbjRgKAKROLDKfx3PV23zAQHmBvmT3zVliFp9mWodHSUEcKgNnJGC4AP_bAOX-2ZSSsfCHfNM5wfjbJDyiQh-sCjFQ, openId=ofrsY6xraPjv9augA9L2kUIB0-Fk, unionId=null, code=null, type=WX, nickName=null, headImgurl=null, sex=null, skipPhoneBind=1), LoginCommonParam(channel=vivo, ip=null, verName=null, platform=null, deviceId=null, imei=null, app=peanut, subApp=peanut, dfId=DUE_59hD2L9BBgukNR3Jv2DjnW00iRtU8z0eRFVFXzU5aEQyTDlCQmd1a05SM0p2MkRqblcwMGlSdFU4ejBlc2h1, idfa=null, mac=null, oaid=null, androidId=null)], attachments={path=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, version=0.0.0, timeout=5000}]], channel: /10.65.1.81:35450 -> /10.65.5.0:11090

at java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:357)

at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1915)

at org.apache.dubbo.rpc.protocol.AsyncToSyncInvoker.invoke(AsyncToSyncInvoker.java:56)

... 33 more

Caused by: org.apache.dubbo.remoting.TimeoutException: Waiting server-side response timeout by scan timer. start time: 2022-03-15 20:33:31.937, end time: 2022-03-15 20:33:36.968, client elapsed: 0 ms, server elapsed: 5031 ms, timeout: 5000 ms, request: Request [id=690506, version=2.0.2, twoway=true, event=false, broken=false, data=RpcInvocation [methodName=loginWithThird, parameterTypes=[class weli.wormhole.rpc.user.center.model.LoginWithThirdRequest, class weli.wormhole.rpc.user.center.model.LoginCommonParam], arguments=[LoginWithThirdRequest(accessToken=54_y3n-YsttdKOklr_X-mbjRgKAKROLDKfx3PV23zAQHmBvmT3zVliFp9mWodHSUEcKgNnJGC4AP_bAOX-2ZSSsfCHfNM5wfjbJDyiQh-sCjFQ, openId=ofrsY6xraPjv9augA9L2kUIB0-Fk, unionId=null, code=null, type=WX, nickName=null, headImgurl=null, sex=null, skipPhoneBind=1), LoginCommonParam(channel=vivo, ip=null, verName=null, platform=null, deviceId=null, imei=null, app=peanut, subApp=peanut, dfId=DUE_59hD2L9BBgukNR3Jv2DjnW00iRtU8z0eRFVFXzU5aEQyTDlCQmd1a05SM0p2MkRqblcwMGlSdFU4ejBlc2h1, idfa=null, mac=null, oaid=null, androidId=null)], attachments={path=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, interface=weli.wormhole.rpc.user.center.api.ILoginNeedPhoneService, version=0.0.0, timeout=5000}]], channel: /10.65.1.81:35450 -> /10.65.5.0:11090

at org.apache.dubbo.remoting.exchange.support.DefaultFuture.doReceived(DefaultFuture.java:189)

at org.apache.dubbo.remoting.exchange.support.DefaultFuture.received(DefaultFuture.java:153)

at org.apache.dubbo.remoting.exchange.support.DefaultFuture$TimeoutCheckTask.run(DefaultFuture.java:252)

at org.apache.dubbo.common.timer.HashedWheelTimer$HashedWheelTimeout.expire(HashedWheelTimer.java:648)

at org.apache.dubbo.common.timer.HashedWheelTimer$HashedWheelBucket.expireTimeouts(HashedWheelTimer.java:727)

at org.apache.dubbo.common.timer.HashedWheelTimer$Worker.run(HashedWheelTimer.java:449)

... 1 more

2、排查处理

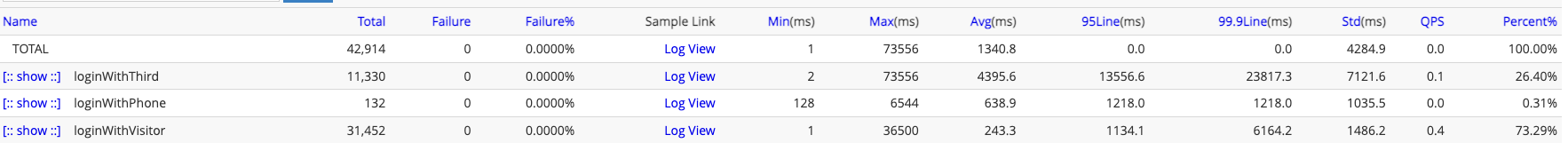

a、查询用户中心cat监控,确认用户中心是否收到请求且正常响应。

当前状态:

正常状态:

对比结果:

用户中心已收到正常请求,排除客户端调用问题;

用户中心当前响应时间异常高出平常的响应时间,判断为用户中心内部业务处理出现问题。

b、查看kibana日志,发现无异常日志;

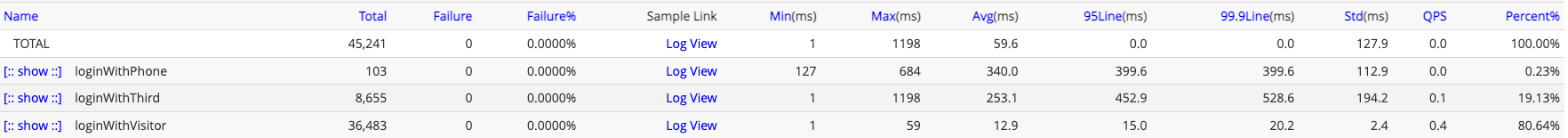

c、使用Arthas诊断工具,监控业务调用链各部分耗时情况;

定位原因为mongo查询慢导致整体业务处理超时。

d、分析mongo查询语句,查看索引情况

public User getUserByDfId(String dfId, String app) {

Criteria criteria = Criteria.where("userBindInfo.dfId").is(dfId).and("app").is(app).and("status").is(1);

return this.mongoTemplate.findOne(Query.query(criteria), User.class);

}

db.getCollection('wormhole_user').getIndexes();

[

{

"v" : 1,

"key" : {

"app" : 1

},

"name" : "app",

"ns" : "wormhole.wormhole_user",

"background" : true

},

{

"v" : 1,

"key" : {

"userBindInfo.dfId" : 1

},

"name" : "userBindInfo.dfId",

"ns" : "wormhole.wormhole_user",

"background" : true

},

... (其余不关注索引已删除)

]

“userBindInfo.dfId”字段存在索引,查询语句也无明显异常。

e、查看华为云后台mongo慢日志

{

"op": "query",

"ns": "wormhole.wormhole_user",

"command": {

"find": "wormhole_user",

"filter": {

"userBindInfo.dfId": "DUQmN3QLpLRwC8PNe1joT_9SDRrzazT4cUc5RFVRbU4zUUxwTFJ3QzhQTmUxam9UXzlTRFJyemF6VDRjVWM1c2h1",

"app": "maybe",

"status": 1

},

"ntoreturn": -1

},

"keysExamined": 1870039,

"docsExamined": 1870039,

"cursorExhausted": true,

"numYield": 14836,

"nreturned": 0,

"locks": {

"Global": {

"acquireCou

通过慢日志发现,mongo并未使用“userBindInfo.dfId”作为索引查询条件,而是使用了“app”作为索引查询条件。

初步认为索引匹配度不够,mongo没正确使用索引。

f、新增联合索引,提高索引匹配度,查询时间恢复正常,接口恢复正常。

db.getCollection("wormhole_user").createIndex({

"userBindInfo.dfId": 1,

"app": 1,

"status": 1

}, {

name: "idx_user_app_userBindInfo.dfId",

background: true

});

3、问题分析

a、explain()语句分析

db.getCollection('wormhole_user').find({"userBindInfo.dfId": "DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1", "app": "maybe", "status": 1}).explain();

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "wormhole.wormhole_user",

"indexFilterSet" : false,

"parsedQuery" : {

"$and" : [

{

"app" : {"$eq" : "maybe"

}

},

{

"status" : {"$eq" : 1.0

}

},

{

"userBindInfo.dfId" : {"$eq" : "DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1"

}

}

]

},

"winningPlan" : {

"stage" : "FETCH",

"filter" : {

"$and" : [

{"app" : {

"$eq" : "maybe"}

},

{"status" : {

"$eq" : 1.0}

}

]

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"userBindInfo.dfId" : 1

},

"indexName" : "userBindInfo.dfId",

"isMultiKey" : false,

"multiKeyPaths" : {

"userBindInfo.dfId" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"userBindInfo.dfId" : [ "[\"DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1\", \"DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1\"]"

]

}

}

},

"rejectedPlans" : [

{

"stage" : "FETCH",

"filter" : {

"$and" : [ {

"status" : {

"$eq" : 1.0

}}, {

"userBindInfo.dfId" : {

"$eq" : "DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1"

}}

]

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {"app" : 1

},

"indexName" : "app",

"isMultiKey" : false,

"multiKeyPaths" : {"app" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {"app" : [

"[\"maybe\", \"maybe\"]"]

}

}

},

{

"stage" : "FETCH",

"filter" : {

"$and" : [ {

"app" : {

"$eq" : "maybe"

}}, {

"userBindInfo.dfId" : {

"$eq" : "DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1"

}}, {

"status" : {

"$eq" : 1.0

}}

]

},

"inputStage" : {

"stage" : "AND_SORTED",

"inputStages" : [ {

"stage" : "IXSCAN",

"keyPattern" : {

"app" : 1

},

"indexName" : "app",

"isMultiKey" : false,

"multiKeyPaths" : {

"app" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"app" : [

"[\"maybe\", \"maybe\"]"

]

}}, {

"stage" : "IXSCAN",

"keyPattern" : {

"userBindInfo.dfId" : 1

},

"indexName" : "userBindInfo.dfId",

"isMultiKey" : false,

"multiKeyPaths" : {

"userBindInfo.dfId" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"userBindInfo.dfId" : [

"[\"DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1\", \"DUqTmV8lQcInjzWtuyJuAntkbRi5_YZ3yHd9RFVxVG1WOGxRY0luanpXdHV5SnVBbnRrYlJpNV9ZWjN5SGQ5c2h1\"]"

]

}}

]

}

}

]

},

"serverInfo" : {

"host" : "host-192-168-10-163",

"port" : 27017,

"version" : "4.0.3",

"gitVersion" : "0ead8608f9d151a199b05117bcc79ccb8d5f44a0"

},

"ok" : 1.0,

"operationTime" : Timestamp(1647486920, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1647486920, 2),

"signature" : {

"hash" : { "$binary" : "ylTAtLK6mVpNwDt7PTGwNST/9z4=", "$type" : "00" },

"keyId" : NumberLong(7031512438860152835)

}

}

}

- 发现mongo执行计划选择正确,这正好解释了服务在之前很长的一段时间内都是正常的原因;

- mongo慢日志中的执行计划却选择错误,表明有什么原因导致了mongo执行计划的选择发生了变更。

b、文档查询

- MongoDB 查询优化器会缓存最有效的查询计划,关联的计划缓存条目会用于具有相同查询形状的后续查询;

- 计划缓存会出现刷新:

- MongoDB重启;

- 索引或者集合的删除添加更新等操作;

- 最近最少使用 (LRU) 缓存替换机制清除最近最少访问的缓存条目。

结论:之前使用了计划缓存服务正常,到了某一时刻,计划缓存失效,MongoDB重新选择计划,此时选择了错误的计划并缓存,导致后续查询全部出现问题

c、查询首次出现的慢日志

{

"op":"query",

"ns":"wormhole.wormhole_user",

"command":{

"find":"wormhole_user",

"filter":{

"userBindInfo.dfId":null,

"app":"cybercat",

"status":1

},

"ntoreturn":-1

},

"keysExamined":872550,

"docsExamined":872550,

"fromMultiPlanner":true,

"replanned":true,

"cursorExhausted":true,

"numYield":13785,

"nreturned":0,

"locks":{

"Global":{

"acquireCou

发现查询条件中出现了null值,本地再次分析:

db.getCollection('wormhole_user').find({"userBindInfo.dfId": null, "app": "maybe", "status": 1}).explain();

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "wormhole.wormhole_user",

"indexFilterSet" : false,

"parsedQuery" : {

"$and" : [

{

"app" : {"$eq" : "maybe"

}

},

{

"status" : {"$eq" : 1.0

}

},

{

"userBindInfo.dfId" : {"$eq" : null

}

}

]

},

"winningPlan" : {

"stage" : "FETCH",

"filter" : {

"$and" : [

{"status" : {

"$eq" : 1.0}

},

{"userBindInfo.dfId" : {

"$eq" : null}

}

]

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"app" : 1

},

"indexName" : "app",

"isMultiKey" : false,

"multiKeyPaths" : {

"app" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"app" : [ "[\"maybe\", \"maybe\"]"

]

}

}

},

"rejectedPlans" : [

{

"stage" : "FETCH",

"filter" : {

"$and" : [ {

"userBindInfo.dfId" : {

"$eq" : null

}}, {

"app" : {

"$eq" : "maybe"

}}, {

"status" : {

"$eq" : 1.0

}}

]

},

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {"userBindInfo.dfId" : 1

},

"indexName" : "userBindInfo.dfId",

"isMultiKey" : false,

"multiKeyPaths" : {"userBindInfo.dfId" : []

},

"isUnique" : false,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {"userBindInfo.dfId" : [

"[undefined, undefined]",

"[null, null]"]

}

}

}

]

},

"serverInfo" : {

"host" : "host-192-168-10-163",

"port" : 27017,

"version" : "4.0.3",

"gitVersion" : "0ead8608f9d151a199b05117bcc79ccb8d5f44a0"

},

"ok" : 1.0,

"operationTime" : Timestamp(1647489666, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1647489666, 1),

"signature" : {

"hash" : { "$binary" : "1KJI3aoz2QbOwTKlbkNl9SmWLzw=", "$type" : "00" },

"keyId" : NumberLong(7031512438860152835)

}

}

}

结果与线上表现一致

4、回顾

紧急处理时,直接创建了一个新的索引,导致了计划缓存失效,重新选择计划并缓存,所以服务恢复了正常。

三、总结

- 避免空值的查询;

- 尽可能的使用explain()分析各种不同的查询情况;

- 可以使用mongo提供的PlanCache相关功能,查看计划缓存情况;

- 可使用hint()推荐查询索引。

到此这篇关于一次线上mongo慢查询问题排查处理的文章就介绍到这了,更多相关mongo慢查询问题排查内容请搜索本站以前的文章或继续浏览下面的相关文章希望大家以后多多支持本站!

版权声明:本站文章来源标注为YINGSOO的内容版权均为本站所有,欢迎引用、转载,请保持原文完整并注明来源及原文链接。禁止复制或仿造本网站,禁止在非www.yingsoo.com所属的服务器上建立镜像,否则将依法追究法律责任。本站部分内容来源于网友推荐、互联网收集整理而来,仅供学习参考,不代表本站立场,如有内容涉嫌侵权,请联系alex-e#qq.com处理。

关注官方微信

关注官方微信